Digitization gives you access to data locked in traditional paper-based systems that you can use for analytics and data-driven decision-making. Unfortunately, organizations face two main hurdles in digital adoption.

Firstly, data must be in the form of text and numbers to be useful for analysis. Simply scanning paper forms, invoices, and other documents is not enough. All it does is create an extensive database of document images unusable by business intelligence tools.

Secondly, manual data entry from paper (or scanned images) is tedious, time-consuming, and costly. Data from a paper-based invoice could take weeks to reach analysis and cannot be used for real-time decision-making.

Machine learning OCR is a solution to these challenges. Optical Character Recognition (OCR) is a popular technology that automatically extracts text from document images and converts it into a machine-readable format. You can integrate modern OCR solutions with your analytics for real-time insights into your processes.

Let's look into what machine learning OCR is, how it works, its challenges, and how the technology has progressed into deep learning OCR.

What is machine learning OCR?

Machine learning OCR is a technology that uses machine learning algorithms to recognise and extract text from images or scanned documents. While this task is easy for humans, it is very complex for software.

For software, any image is a set of pixels with different colours, grayscale values, and other attributes. Machine learning OCR tools have to identify the pixel groups that together form the shapes of letters, digits, and other characters. The task is made even more challenging because of factors like:

- Varying font sizes and shapes

- Handwritten text with different writing styles

- Blurry or low-quality images

- Multiple text blocks in various parts of the image

However, machine learning OCR technologies solve the problem using pre-trained models (or algorithms) to scan the image and recognize patterns and features. Data scientists train the models using large amounts of labeled data (or images with associated answers).

The model uses statistical techniques to correlate known pixel groups with text. It can then recognize patterns and features in an unknown image and "guess" the text accurately.

Let's look at an example to understand the concept in very simple terms. Suppose you give a machine learning algorithm a series of pairs: (1, a), (2, b), (3, c). Then you ask it to guess the pair for the input 4.

The algorithm will output "d" because it has learned that each number corresponds to the letter at that position in the alphabet. Similarly, an OCR model makes connections between pixel groups, their attributes, and associated text or numbers.
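The pairs example can be written as a toy Python sketch. It assumes the pattern is a fixed offset between each number and its letter's character code, which is far simpler than the statistical relationships a real model learns. The function names `learn_offset` and `predict` are illustrative only.

```python
def learn_offset(pairs):
    """Infer the constant offset between each number and its letter's code point."""
    offsets = {ord(letter) - number for number, letter in pairs}
    if len(offsets) != 1:
        raise ValueError("pairs do not follow a single consistent pattern")
    return offsets.pop()


def predict(offset, number):
    """Apply the learned offset to an unseen input."""
    return chr(number + offset)


training_pairs = [(1, "a"), (2, "b"), (3, "c")]
offset = learn_offset(training_pairs)
print(predict(offset, 4))  # prints "d"
```

The "training" step extracts one rule from labeled examples, and the "prediction" step applies it to an input the algorithm has never seen.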

How does machine learning OCR work?

In simple words, machine learning OCR scans an image and recognizes patterns using pre-trained models, and then “rewrites” the text from what it “read.” This process happens in several steps:

- data preprocessing

- text localisation

- text recognition

- post-processing

Data preprocessing

As a first step, most OCR technologies will preprocess the scanned image using techniques like resizing, normalization, and noise reduction to enhance the quality of the input data. For instance, the system may:

- despeckle or remove any spots

- deskew or tilt the scanned document slightly to fix alignment issues

- smooth the edges of the text

- clean up lines and boxes in the image.

While this step is technically not OCR, it is a critical part of the text extraction process.
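As a rough illustration of two of these clean-up steps, the sketch below binarizes a tiny grayscale image and then despeckles it. The image is represented as a plain list of rows, and the threshold of 128 and the "no ink neighbours" rule are assumptions chosen for the example, not settings from any particular OCR engine.

```python
def binarize(gray, threshold=128):
    """Map each grayscale pixel (0-255) to 1 for ink (dark) or 0 for background."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


def despeckle(binary):
    """Clear ink pixels that have no ink pixel among their 8 surrounding cells."""
    height, width = len(binary), len(binary[0])
    cleaned = [row[:] for row in binary]
    for y in range(height):
        for x in range(width):
            if binary[y][x] == 0:
                continue
            neighbours = sum(
                binary[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if (ny, nx) != (y, x)
            )
            if neighbours == 0:
                cleaned[y][x] = 0
    return cleaned


scan = [
    [255, 255, 255, 255, 255],
    [255,  30,  40, 255, 255],
    [255,  35, 255, 255, 255],
    [255, 255, 255, 255,  20],  # isolated dark speck in the corner
]
for row in despeckle(binarize(scan)):
    print(row)
```

The three connected dark pixels survive, while the lone speck in the bottom-right corner is removed.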

Text localisation

The next task is to locate the image areas containing text. Text regions often have distinct edge information like lines, loops, and contours. Additionally, scanned documents may have distinct "objects" or other images mixed in (like a company logo on an invoice document).

Text localization uses techniques like edge detection, object detection, and contour analysis to separate text from other types of images.
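One simplified stand-in for contour analysis is connected-component labelling: group touching ink pixels and report a bounding box for each group. The sketch below assumes an already binarized image (1 for ink, 0 for background) and uses a hypothetical helper named `find_regions`. Production systems use far more robust detectors.

```python
from collections import deque


def find_regions(binary):
    """Return bounding boxes (top, left, bottom, right) of connected ink regions."""
    height, width = len(binary), len(binary[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if binary[y][x] == 0 or seen[y][x]:
                continue
            # Breadth-first flood fill over 4-connected ink pixels.
            queue = deque([(y, x)])
            seen[y][x] = True
            top, left, bottom, right = y, x, y, x
            while queue:
                cy, cx = queue.popleft()
                top, bottom = min(top, cy), max(bottom, cy)
                left, right = min(left, cx), max(right, cx)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


page = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 1, 0],
    [0, 0, 0, 0, 1, 0],
]
print(find_regions(page))  # [(1, 1, 2, 2), (2, 4, 3, 4)]
```

Each box marks a candidate region that later stages can classify as text or as a non-text object such as a logo.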

Text recognition

Once the machine learning OCR system has found the text regions, it decomposes that specific image area to identify individual letters and words. At this stage, individual characters are called "glyphs." To identify a glyph, the system may match it to a previously stored glyph or look for loops, crosses, and dots to "guess" the letter from its unique patterns.

This is especially challenging if you are trying to convert handwriting to text in a digital format.
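Matching a glyph against previously stored glyphs can be sketched as nearest-template lookup. The 3x5 bitmaps and the pixel-difference (Hamming) distance below are assumptions made for illustration. Real engines use richer features and many more templates.

```python
# Hypothetical stored glyphs: each is a 5-row, 3-column bitmap of "1" (ink) and "0".
TEMPLATES = {
    "I": ("111", "010", "010", "010", "111"),
    "L": ("100", "100", "100", "100", "111"),
    "T": ("111", "010", "010", "010", "010"),
}


def distance(glyph, template):
    """Count the pixels where two equally sized bitmaps disagree."""
    return sum(
        a != b
        for glyph_row, template_row in zip(glyph, template)
        for a, b in zip(glyph_row, template_row)
    )


def recognise(glyph):
    """Return the stored letter whose template differs from the glyph in the fewest pixels."""
    return min(TEMPLATES, key=lambda letter: distance(glyph, TEMPLATES[letter]))


# A "T" with one stray ink pixel in the fourth row.
noisy_t = ("111", "010", "010", "110", "010")
print(recognise(noisy_t))  # prints "T"
```

The noisy glyph differs from the stored "T" by one pixel and from the other templates by more, so the closest match still wins despite the noise.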

Post-processing

Text recognition may have some errors due to variations in fonts, noise, or other factors. Post-processing is used to improve the accuracy of results. In this step, the OCR system uses spelling correction and grammar rules to correct the text.

For instance, it may compare the recognised text against a dictionary or employ statistical methods to check the frequency of different words in the text.

It can also format the recognised text to match a desired output format or style. For instance, it may normalize capitalization, remove unnecessary spaces or punctuation, or apply specific formatting rules for dates, numbers, or other patterns.
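The sketch below strings a few such corrections together using only Python's standard library. The small vocabulary, the similarity cutoff of 0.75, and the assumption that dates arrive day-first as DD/MM/YYYY are all illustrative choices, not fixed rules.

```python
import difflib
import re

VOCABULARY = ["invoice", "total", "amount", "date", "number"]  # assumed domain dictionary


def correct_word(word, vocabulary=VOCABULARY, cutoff=0.75):
    """Replace a word with its closest (lowercase) dictionary entry, if similar enough."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def normalise_date(text):
    """Rewrite DD/MM/YYYY dates as YYYY-MM-DD (assumes day-first input)."""
    return re.sub(r"\b(\d{2})/(\d{2})/(\d{4})\b", r"\3-\2-\1", text)


def post_process(raw):
    """Collapse whitespace, fix near-miss words, and normalise dates."""
    tokens = re.sub(r"\s+", " ", raw).strip().split(" ")
    corrected = [
        correct_word(tok) if any(ch.isalpha() for ch in tok) else tok
        for tok in tokens
    ]
    return normalise_date(" ".join(corrected))


print(post_process("lnvoice  date  05/03/2024   tota1 120.50"))
# prints "invoice date 2024-03-05 total 120.50"
```

Tokens without letters, such as amounts and dates, skip the dictionary step so that numbers are never "corrected" into words.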

Challenges in machine learning OCR

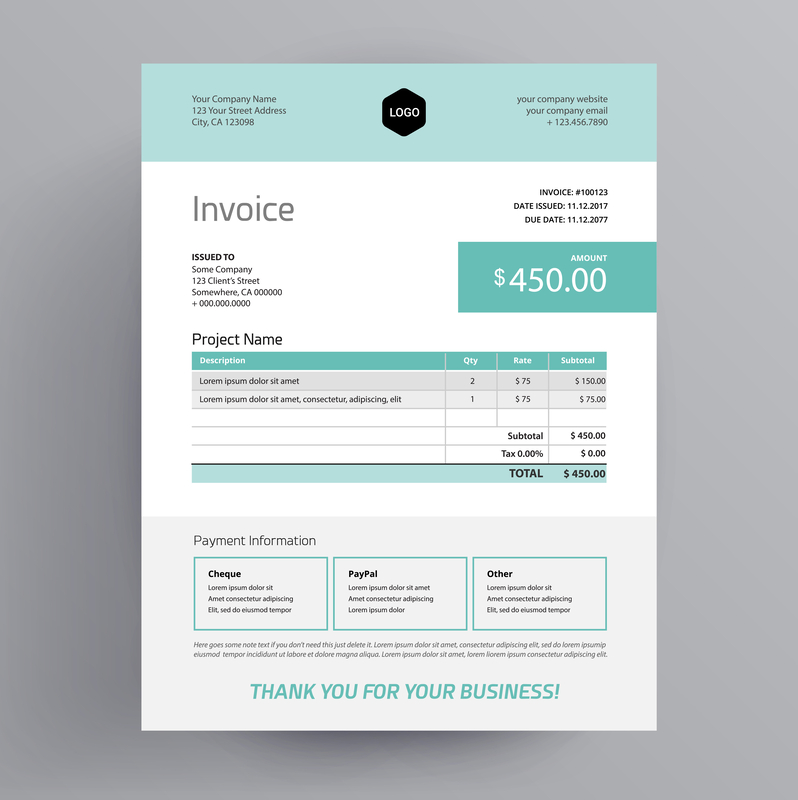

Machine learning OCR is a sophisticated technology, but it struggles in real-world use cases with significant data variation and growing data volume. Techniques like contour analysis, edge detection, and pattern recognition work well mainly for simple, standard document templates. For instance, machine learning OCR is beneficial if all your invoices and paper forms follow one layout, like the sample above.

You can train it with such a data set and get accurate results for unseen documents of the same kind. But in the real world, paper documents vary significantly in layout, text placement, colors, and design. Some formats may have contact details in the top right, while others have them in the bottom left. The date can be in the top right, top left, or sometimes even the middle of the page. This degree of variation is more than traditional machine learning OCR can handle reliably.

ML engineers face a significant challenge due to the ever-expanding range of input data. The complexity arises from the fact that OCR operates at the intersection of two fields:

- Computer Vision (CV): the field that trains software to perceive and interpret the visual world, similar to how humans do.

- Natural Language Processing (NLP): the field that trains machines to understand natural human language.

Consequently, OCR machine learning models must perform various smaller tasks before achieving their ultimate goal. Given the diversity of text features and applications, ML engineers gravitate towards deep learning as the primary choice for designing an optical character recognition algorithm.

What is deep learning OCR?

Deep learning OCR is the next stage of the development of machine learning OCR. It takes OCR beyond standard templates and rule-based engines to a sophisticated AI solution that analyses scanned documents just like a human would!

Deep learning OCR uses a technology called neural networks. Neural networks are made of many interconnected software nodes, often thousands or more, that communicate with each other while processing data.

Every node in a neural network solves a small part of the problem before passing the data to the next node. The whole network works together to improve OCR accuracy and capability.

Neural networks are called deep when they have several hidden layers between input and output, each transforming the output of the layer before it. Data scientists train the network on various datasets to learn and extract complex text patterns from all types of images.

To be more specific, deep learning OCR uses two main types of neural networks to perform different tasks.

- Convolutional Neural Networks (CNNs) for computer vision tasks and

- Recurrent Neural Networks (RNNs) for NLP tasks.

Convolutional neural networks

CNNs contain convolutional layers that transform the input data before passing it to the subsequent layer. The term "convolving" comes from mathematics, where a convolution combines two functions or arrays into a third. In a CNN, the image is convolved with small matrices of weights called filters (or kernels). The arithmetic involves many multiplications and additions, but the underlying concept is akin to a sliding window examining small patches of the image and extracting relevant information.

For example, a filter might look for edges, curves, or textures. Each filter learns to recognise a different aspect of the image, and by combining the outputs of these filters, the network gains a deeper understanding of the overall image.
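The sliding-window idea can be shown in a few lines of plain Python. This is a minimal sketch without padding or stride options. The vertical-edge filter is written by hand here, whereas a trained CNN learns its filter weights from data. Like most deep learning libraries, it computes cross-correlation (the filter is not flipped).

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image and sum element-wise products at each position."""
    k = len(kernel)
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(k) for j in range(k))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Hand-written filter that responds where brightness rises from left to right.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
for row in convolve2d(image, VERTICAL_EDGE):
    print(row)  # each row prints [0, 27, 27]
```

The output is zero over the flat region and large wherever the window straddles the dark-to-bright boundary, which is what "detecting an edge" means in practice.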

Recurrent neural networks

RNNs are neural networks with nodes that have a memory-like component. This memory allows the nodes to remember past information as they process new inputs.

RNNs analyze text one character at a time, considering the surrounding characters to make predictions or infer missing details. They recognise the context of the text, such as capturing dependencies between characters and words.

For instance, in OCR, RNNs can predict the next character in a word based on the characters processed so far, or they can identify specific words or phrases based on the preceding text. This capability of RNNs is especially valuable when dealing with handwritten text, where variations in handwriting, connected characters, or even mistakes can occur.

RNNs can capture these nuances and make informed predictions based on the patterns they've learned from training on large amounts of labeled data.
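The memory-like component can be illustrated with a single recurrent unit. This is a deliberately tiny sketch: real RNNs use vectors and learned weight matrices, whereas the scalar weights `w_in` and `w_rec` below are arbitrary values assumed for the example.

```python
import math


def rnn_step(x, h, w_in=0.5, w_rec=0.9, bias=0.0):
    """One recurrent update: the new state mixes the current input with the previous state."""
    return math.tanh(w_in * x + w_rec * h + bias)


def run(sequence):
    """Feed inputs one at a time, carrying the hidden state forward."""
    h = 0.0
    for x in sequence:
        h = rnn_step(x, h)
    return h


# Both sequences end with the same input (0), but their histories differ.
print(run([0, 0]))
print(run([1, 0]))
```

The two final states differ even though the last input is identical, because the hidden state still carries a trace of the earlier input. That carried-over state is what lets an RNN use preceding characters as context.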

How does deep learning OCR work?

Deep learning OCR also has preprocessing and post-processing steps like the previous generation of machine learning OCR. But in between, instead of traditional ML models, the data is fed into CNN and RNN systems.

The process works as follows.

Feature extraction

After preprocessing, the data is fed into CNNs. CNNs are primarily responsible for extracting visual features from images or documents. They analyze the input data and capture patterns, edges, textures, and other visual characteristics relevant to OCR.

Once the visual features are extracted, the output from the CNNs is further processed to segment the text into individual characters or words. This step involves identifying boundaries or separating different text regions within the image or document. Accurate segmentation is crucial for enabling proper recognition in subsequent stages.

Contextual analysis

The segmented characters or words are then fed into the RNN part of the OCR system. RNNs, with their sequential memory, analyze the characters or words sequentially, considering the context and dependencies between them. This allows the system to understand the text's meaning, capture language patterns, and improve recognition accuracy.

Integrating visual feature extraction through CNNs and contextual understanding through RNNs enhances the system's ability to handle various fonts, languages, and document layouts, making it suitable for diverse OCR applications.

Benefits of deep learning OCR

Deep learning OCR gives you all the benefits of machine learning OCR at scale.

Improved efficiency

Deep learning OCR handles large data volumes efficiently, making it scalable for organizations with high document volumes. The integration of CNNs and RNNs allows a better understanding of text context and improves recognition accuracy, even in challenging scenarios. End-to-end processing streamlines the workflow and eliminates the need for separate tools or modules, making the OCR pipeline more convenient.

Increased flexibility

Deep learning OCR handles various fonts, languages, and document layouts, making it highly flexible and adaptable. It excels in processing complex documents that contain multiple text blocks, images, or irregular designs. You can use it for text extraction from diverse sources.

Enhanced data analysis

Deep learning OCR handles real-time processing, allowing immediate text recognition and extraction for applications that require fast data processing. You can further integrate the extracted data into your analytics and decision-making processes, unlocking valuable insights and facilitating real-time business intelligence.

Reduced manual data entry

Deep learning OCR systems encompass all the necessary steps, from preprocessing to post-processing, within a single architecture.

It reduces the reliance on manual data entry processes, which are time-consuming, error-prone, and costly. By automating the extraction of text from documents, it significantly reduces the need for human intervention and speeds up data processing tasks.

FAQs about Machine Learning OCR

What is the difference between OCR and machine learning?

OCR is one application of machine learning: machine learning models are the underlying technology that powers modern OCR solutions. The scope of machine learning, however, extends well beyond OCR to many problems other than text extraction from images.

Is OCR considered AI?

OCR is one application of AI technologies, but not all OCR solutions are considered AI. Some are rule-based and rely on older template- or pattern-matching algorithms rather than learned models. Advanced OCR solutions, however, use machine learning (a subset of AI) and deep learning to deliver faster and more accurate results across varied images.

Is OCR software or hardware?

OCR solutions are software-based, and you can integrate them into most existing applications. You don't require specialized hardware to run the solution. Many OCR providers offer ready-made solutions for invoice extraction, ID verification, or general image-to-text conversion. You just call the service from your code through an API or use it directly from your browser.

Which programming language is best for OCR?

Python and its open-source OCR libraries are suitable for building OCR solutions from scratch. However, it is often more advantageous to use an API-based, pre-trained, fully managed OCR platform. The OCR provider trains, maintains, and deploys the service; all you have to do is call it in your code. The provider's neural networks are typically more sophisticated than what most in-house teams can build.

In the process of digital transformation, machine learning OCR and deep learning OCR are vital allies. They open the gateways so information flows freely and becomes more accessible and valuable for the whole organization.

Traditional machine learning OCR began by preprocessing images, then identifying and recognising the text using rule-based algorithms. However, the algorithms faced limitations in the range and volume of document images they could process.

Machine learning OCR evolved into deep learning OCR that uses different types of neural networks to improve the text extraction process. Convolutional neural networks identify different image regions and text blocks. Recurrent neural networks identify the words and derive meaning from them. Together, they convert scanned document images to analyzable data with the accuracy of a human but at a much faster speed!

Get started with advanced deep learning OCR, and unleash the power of your data. Embrace the marvels of neural networks, and let the transformation begin!